For several years, the Cognitive Systems Lab (CSL) at the University of Bremen, the Department of Neurosurgery at Maastricht University in the Netherlands, and the ASPEN Lab at Virginia Commonwealth University (USA) have been working on a neurospeech prosthesis. The goal: to translate speech-related neural processes in the brain directly into audible speech. That goal has now been achieved:

“We have managed to make our test subjects hear themselves speak, although they only imagine speaking. The brainwave signals of volunteers who imagine speaking are directly converted into audible output by our neurospeech prosthesis — in real time without any perceptible delay!”

- Professor Tanja Schultz, Director of the CSL

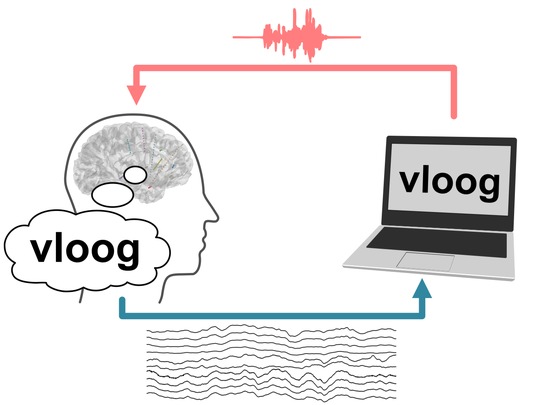

The innovative neurospeech prosthesis is based on a closed-loop system that combines modern speech synthesis with brain-computer interface technology. The system, developed by Miguel Angrick at the CSL, takes as input the neural signals of users who imagine speaking. Using machine learning, it converts these signals into speech almost instantaneously and plays the result back to the users as audible feedback. “This closes the loop for them between imagining speaking and hearing their speech,” Angrick said.
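The closed-loop idea described above can be illustrated with a minimal Python sketch. It is not the CSL implementation: the names `run_closed_loop`, `toy_decoder`, and `context_size` are hypothetical, and the decoder is a placeholder that simply averages its input where a trained model would sit. The point is only the loop structure, in which each incoming frame of neural data is decoded and played back right away.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List

Frame = List[float]  # one short window of neural features (hypothetical layout)


def toy_decoder(context: List[Frame]) -> float:
    """Placeholder for a trained model: averages every value in the context."""
    values = [v for frame in context for v in frame]
    return sum(values) / len(values)


def run_closed_loop(
    neural_frames: Iterable[Frame],
    decode: Callable[[List[Frame]], float],
    play: Callable[[float], None],
    context_size: int = 3,
) -> int:
    """Decode each incoming frame and play it back immediately.

    Returns the number of audio samples that were played.
    """
    context: Deque[Frame] = deque(maxlen=context_size)  # most recent frames only
    n_played = 0
    for frame in neural_frames:
        context.append(frame)            # newest neural data arrives
        sample = decode(list(context))   # neural activity -> audio sample
        play(sample)                     # feedback the user would hear
        n_played += 1
    return n_played


# Usage: collect the "played" samples in a list instead of sending them to a speaker.
frames = [[1.0, 3.0], [2.0, 4.0], [0.0, 0.0], [6.0, 6.0]]
played: List[float] = []
count = run_closed_loop(frames, toy_decoder, played.append, context_size=2)
```

Because output is produced inside the same loop iteration that receives the input, the delay is bounded by the time needed for one decoding step, which is what makes immediate feedback possible in a design of this kind.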

Study with volunteer epilepsy patient

The work, published in the Nature Portfolio journal Communications Biology, is based on a study with a volunteer epilepsy patient who had depth electrodes implanted for medical testing and was in the hospital for clinical monitoring. In the first step, the patient read texts aloud, from which the closed-loop system learned the correspondence between speech and neural activity using machine learning techniques. “In the second step, this learning process was repeated with whispered speech and with imagined speech,” explains Miguel Angrick. “In the process, the closed-loop system generated synthesized speech. Although the system had learned the correspondences on audible speech only, audible output is also produced with whispered speech and with imagined speech.” This suggests that the underlying language processes in the brain for audibly produced speech are comparable to those for whispered and imagined speech.
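The two-step procedure described above, learning on audible speech and then applying the learned mapping to other speaking modes, can be sketched as follows. This is an illustration under loud assumptions, not the study's method: the data are synthetic random numbers, the feature sizes are arbitrary, and a plain least-squares linear model stands in for whatever model the researchers actually trained. The function names `fit_decoder` and `decode` are hypothetical.

```python
import numpy as np


def fit_decoder(neural: np.ndarray, speech: np.ndarray) -> np.ndarray:
    """Fit least-squares weights mapping neural features (plus bias) to speech features."""
    design = np.hstack([neural, np.ones((neural.shape[0], 1))])
    weights, *_ = np.linalg.lstsq(design, speech, rcond=None)
    return weights


def decode(neural: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Apply previously fitted weights to new neural features."""
    design = np.hstack([neural, np.ones((neural.shape[0], 1))])
    return design @ weights


rng = np.random.default_rng(0)

# Synthetic stand-in data: 8 neural features per frame, 4 speech features per frame.
true_map = rng.normal(size=(8, 4))
audible_neural = rng.normal(size=(200, 8))
audible_speech = audible_neural @ true_map + 0.01 * rng.normal(size=(200, 4))

# Step 1: learn the correspondence from audible speech only.
weights = fit_decoder(audible_neural, audible_speech)

# Step 2: reuse the same weights on frames from another mode (here: more synthetic data).
imagined_neural = rng.normal(size=(50, 8))
synthesized = decode(imagined_neural, weights)
```

In this toy setup the second step works by construction, because both data sets share the same underlying mapping. In the study, the fact that a model trained only on audible speech still produced audible output for whispered and imagined speech is the empirical finding.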

Important role of the Bremen Cognitive Systems Lab

“Speech neuroprosthetics aims to provide a natural communication channel for individuals who are unable to speak due to physical or neurological impairments,” says Professor Tanja Schultz, explaining the background for the intensive research activities in this field, in which the Cognitive Systems Lab at the University of Bremen plays a globally respected role. “Real-time synthesis of acoustic speech directly from measured neural activity could enable natural conversations and significantly improve the quality of life of people whose communication capabilities are severely limited.”

The groundbreaking innovation is the result of a long-standing collaboration jointly funded by the German Federal Ministry of Education and Research (BMBF) and the U.S. National Science Foundation (NSF) as part of the Multilateral Collaboration in Computational Neuroscience research program. The collaboration with Professor Dean Krusienski (ASPEN Lab, Virginia Commonwealth University) was set up together with former CSL staff member Dr. Christian Herff, now an assistant professor at Maastricht University, as part of the successful RESPONSE (REvealing SPONtaneous Speech processes in Electrocorticography) project. It is currently being continued with CSL staff member Miguel Angrick in the ADSPEED (ADaptive Low-Latency SPEEch Decoding and synthesis using intracranial signals) project.